The use of AI in marketing has exploded since ChatGPT and DALL-E became freely available to the public. It’s up to global marketers to know how to incorporate AI-generated content into their work safely and responsibly, and that includes labeling it properly.

Labeling AI-generated content has become a hotly debated topic. While some believe that AI labels are necessary to combat misinformation, others see AI as a tool, like Photoshop, that shouldn’t be demonized.

There’s a lot to consider when labeling AI-generated content. We’ll guide you in choosing the best approach for your company, from the most effective ways to label content to determining whether AI-assisted content needs a label at all.

The rise of AI in content creation

While generative AI only entered widespread use after ChatGPT’s public release in late 2022, its adoption in the marketing industry has been swift and overwhelming. In a 2024 survey of digital marketers, 75.7% said they use AI tools for work, and 98.1% said that understanding AI is important for their job.

Beyond standalone apps like ChatGPT, AI is increasingly being integrated into existing software and workflows. Microsoft Office, Adobe Creative Cloud and Canva are just a few of the programs that have added AI features to their products.

AI’s impact on content creation has been huge, reshaping how many marketers and content creators work. From producing more content more quickly to hyper-personalized ads and AI-driven analytics, it has allowed marketers to speed up processes while reducing human error.

AI can assist with almost any kind of content. It can brainstorm article ideas, generate customized chatbot responses to user questions, create images and videos in a consistent tone or style, and even suggest the best places to put SEO keywords in an article. It’s easy to see why its use has grown so rapidly in such a short time: it can take care of the “boring and tedious” aspects of content creation, freeing you to focus on strategy.


AI is not going to replace marketers or do their jobs for them entirely, however. It can generate content far faster than a human, but without human refinement, that content may lack context, nuance and emotion, resulting in an uncanny “AI feel.”

“AI is not human—it doesn’t think, work or understand as we do,” says Naomi Bleackley, Editorial Account Manager and AI specialist at VeraContent. She explains that while its responses may appear fluent, this is because of its training on vast amounts of data and its ability to predict the next word based on probability. However, AI lacks genuine comprehension and relies on statistical patterns rather than true understanding.

Relying solely on AI to create marketing materials can make customers wary. A 2024 survey across 17 markets found that about half of consumers are uncomfortable with brands using AI to create digital brand ambassadors or to generate or edit images used in advertising. That discomfort also extended to product images, although customers had a harder time telling whether a product image was AI-generated.

A still-sizable 41–42% of respondents in that survey were uncomfortable with AI generating product descriptions and taglines or determining ad placement in any media channel. And more people can spot AI-generated copy than you may think. In another 2024 study of 1,000 Americans, respondents of all ages correctly identified AI-generated content about 54.6% of the time.

Perhaps unsurprisingly, younger respondents (18- to 24-year-olds) were better at judging whether something was AI-generated. More unexpectedly, respondents were slightly better at identifying AI-generated copy (57.3%) than AI-generated images (53.4%). This may be due to AI copy’s direct, mechanical tone and occasionally awkward phrasing.

Across studies, people tend to trust content less when it’s labeled as AI-generated, even when the content is accurate or was made entirely by a person. In a 2025 study on how AI use affects brand perception, participants imagined receiving a sincere letter from a salesman who had helped them buy a new set of weights.

Participants who were told the salesman wrote the letter himself responded favorably, while those told that the letter was AI-generated felt it violated their moral principles. They were also less likely to recommend the store to others, more likely to switch brands on a future purchase, and even gave the store poor reviews on a simulated review site.

Still, customer reactions to AI were nuanced. Factual communications were far less likely than emotional ones, like the letter, to trigger negative reactions. People also responded more positively to AI-generated messages signed by a “sentient” chatbot than to those signed by a real company representative. And messages that were only edited by AI, rather than fully generated, faced less criticism.


What makes consumers dislike AI?

There are many reasons: fear of the unknown, media hype, awareness that AI can “hallucinate” false information, fears of job loss or media manipulation, privacy and environmental concerns, and biases that AI inherits from the data it’s trained on.

“The public’s mistrust comes from AI-generated content feeling so human that it’s confusing. Discovering something that is AI-made can feel deceptive, as we’ve long assumed content is human-created. It’s similar to previous reactions to Photoshopped images—people felt it was ‘cheating.’ With AI taking over more of the creative process, it raises questions: ‘How do I know what’s real anymore?’ This uncertainty is likely the core issue,” said Naomi.

While some of these concerns and reflexive negative reactions may ease as people adjust to using AI every day, how can marketers reconcile AI’s powerful capabilities in content creation with consumers’ mistrust and dislike of AI? That’s where labeling might come into play.

Want to learn more about AI in the marketing industry? Check out our podcast episode where Naomi, Keegan Gates, and Shaheen Samavati discuss how to best integrate AI into the content creation process.

Why labeling AI-generated content matters

Labeling AI-generated content appears to be the current “solution” to the divide between brands and consumers over AI use. It matters for several reasons: brand reputation, campaign success and even legal obligation.

In a 2024 survey, 94% of consumers said all AI content should be disclosed. However, they were split on who should do the disclosing: 33% said it should be the brand’s responsibility, 29% said it should fall to the social network hosting the content, and 17% thought brands, social networks and social media management platforms should share responsibility.

Using AI to generate content raises ethical questions you should consider as a marketer:

- Should consumers be explicitly informed that they’re talking to a computer and not a real person?

- How does your brand remain authentic when a machine helps develop your brand voice?

Labeling makes sense. Customers don’t want to feel lied to, or like a brand is hiding something. When customers recognize AI-generated content but don’t see it disclosed, they may question both the content and the brand. To maintain trust, establish a clear framework for deciding when to label content as AI-generated.

Clearly labeling AI-generated content can build trust with your audience by showing your brand’s commitment to honesty and integrity. It can also differentiate the brand as one that engages in ethical content creation practices, and enhance engagement by starting a conversation about AI that can lead to curiosity and interest instead of aversion.

Beyond voluntary ethical reasons, labeling AI-generated content may become a legal requirement, depending on the market you’re in. In 2023, a bipartisan AI disclosure act was introduced in the US Congress. In the UK, an AI regulation bill was proposed that would establish an AI authority for better oversight.

Then, in March 2024, the European Parliament passed the EU AI Act. The act entered into force in August 2024, and most of its provisions apply from August 2026, though some took effect sooner. It sorts AI systems into risk levels that determine the transparency obligations attached to their use. While generative AI isn’t classified as high-risk, it must comply with EU copyright law and the act’s transparency requirements, which include disclosing AI-generated content.

Because AI is still in its early stages, governments are working out which rules should govern it and its use across different parts of society. If you use AI in your marketing, keep up with the latest developments so you always stay compliant.

How to label AI-generated content effectively

Effective labeling of AI-generated content varies depending on the type of content, how exactly AI was involved, and the platform where it’s posted. As an organization, it helps to set clear disclosure guidelines for each content type so your disclosures stay consistent.

Many social media platforms offer native ways to disclose AI use in your posts:

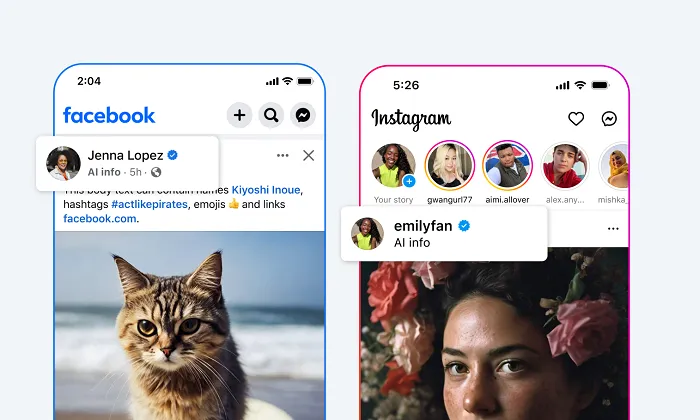

- Meta (Facebook and Instagram): Posts, reels and stories can all be labeled as containing AI content. Adding the label is simple in each case, though it can only be done on mobile devices. For a post, tap the dropdown menu next to “AI label off” and turn on the “Add AI label” switch. For reels, this switch is on the publishing screen, and for stories, it’s in the story settings.

When you label your content, it will have a label in the corner that says “AI info.” When users click or tap that label, they can see how AI could have been used to create the image or video in the post.

Meta may also apply this label automatically if it detects that your content was created with AI, whether or not you disclose it, and it indicates whether the label was self-disclosed or applied by Meta. For content that was edited, rather than created, with AI, the “AI info” label appears in the post’s menu instead.

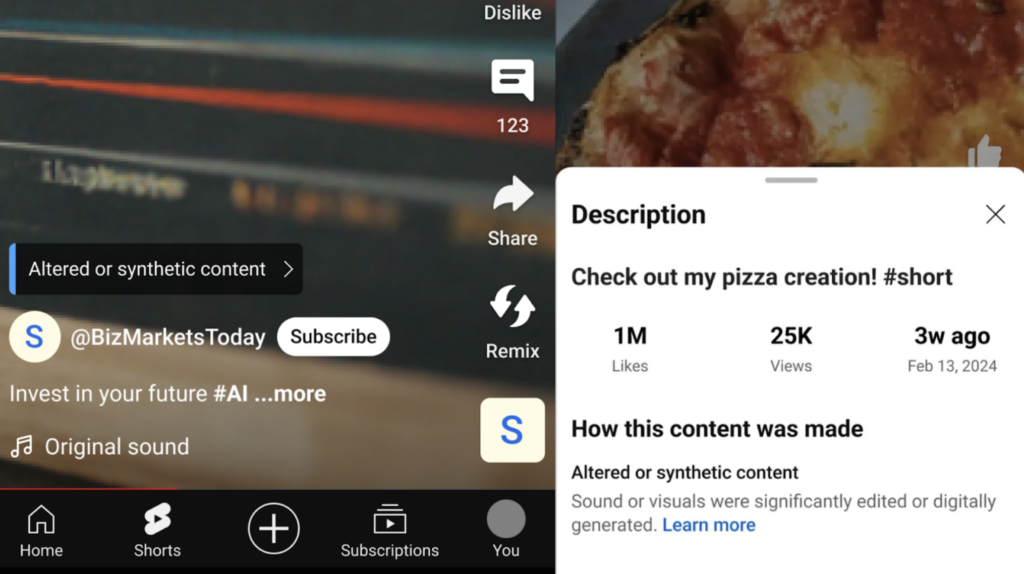

- YouTube: In YouTube Studio, a checkbox during upload lets you self-label a video as containing AI-generated material. YouTube requires this disclosure for realistic-seeming content and may add the label itself if the content is realistic or involves sensitive topics. The label appears in the video description and, for sensitive topics, on the video itself.

Content that is considered “clearly unrealistic,” such as animation or footage of a dragon, doesn’t have to be labeled under YouTube’s guidelines. Disclosure also isn’t required if you use AI only for things like lighting or beauty filters, or to blur backgrounds.

- TikTok: Like Meta and YouTube, TikTok offers an AI content label that lets users disclose whether content was completely AI-generated or significantly edited with AI. When posting a video, open “More options” and turn on the “AI-generated content” switch.

TikTok also automatically labels posts it determines were created or heavily edited with AI. A creator-added label looks visually distinct from an automatic TikTok label.

- LinkedIn: In partnership with the Coalition for Content Provenance and Authenticity (C2PA), LinkedIn has pioneered a slightly different approach from other social networks. Rather than labeling only AI-generated content, the platform has proposed labeling all posts.

Clicking the label displays the content credentials, the content’s source (such as which AI tool helped generate the post) and other information. However, the label is only available if your content carries C2PA credentials. If it doesn’t, you should disclose your AI use manually.

These tools are helpful in certain situations, but what if your content isn’t posted on one of these platforms, or you want to provide your own context about AI use? Labeling content yourself, within the content or its caption, can make your disclosure more effective and help you meet higher standards and best practices.

There are many different ways to label AI-generated content, depending on the type of content and where it’s being posted. Some of the most common methods are:

- Including a small overlay within an image or video, usually in the bottom right-hand corner. This can be simple text like “AI-generated,” or you could design a small graphic that incorporates this text in a more visually appealing way. Meta, for example, places a small graphic in the corner of all of its AI-generated posts.

- Adding a label in the text or caption of a post lets you provide more context or integrate the label more naturally. For example, you could use a caption like “Explore our AI-generated vision of the perfect mountain retreat for your next getaway.”

- You can also include a hashtag indicating that a social media post was made with AI; using the same hashtag every time maintains brand consistency. For example, Meta tags its AI posts with #imaginedwithai.

- For articles or blog posts that were created with the help of AI (we don’t ever recommend fully AI-generated articles), you can add a simple disclaimer at the end of the article. A good example is: “Disclaimer: This article was created with some help from AI, but edited, reviewed and fact-checked by a real person.”

- When posting partially AI-created articles, it’s best practice not to credit a specific author. Don’t list “AI” as an author, either, as readers may take that to mean the article was entirely AI-made, even if human authors also appear on the byline. It’s better to credit “the team” or another generic placeholder, perhaps with a small AI disclosure near the byline. BuzzFeed, for example, publishes AI-generated articles under the name “Buzzy the Robot.”

Regardless of how you choose to label your AI-generated content, the most important thing to do is keep up to date with best practices, regulations and ethical considerations, and meet with your team(s) regularly to discuss your approach.

What wording should you use to indicate that your posts are AI-generated? A 2023 study surveyed people in five countries (the US, Mexico, Brazil, India and China) in four languages. Participants viewed 20 images labeled with nine randomly assigned terms and then judged whether the images were AI-generated or intentionally misleading.

The terms “AI-generated,” “Generated with AI” and “AI Manipulated” were the most effective at signaling AI involvement without implying deception: respondents were least likely to view content as misleading when it carried these labels. By contrast, “edited,” “synthetic” and “not real” were the least effective at conveying AI generation.

When producing global content, keep in mind that the language you post in may affect how you should word your AI content labels. In the study mentioned above, for example, researchers found that Chinese speakers associate the word “artificial” with human involvement, while English, Portuguese and Spanish speakers associate it with automation.

As with many aspects of global marketing, we recommend working with local linguists to determine which labels are most effective in different markets. You may need to test different labels in a target market to determine if the label is effective and resonates with that audience.

Whatever labels you choose, Naomi emphasizes the importance of consistency:

“Brands, especially larger ones with wide audiences, should have an internal policy to maintain ethical standards and consistency. This ensures clarity on how to handle AI-related content and avoids ambiguity. Additionally, they should communicate this policy clearly with any agencies, creators or partners they collaborate with.”

When and how to avoid AI-generated content labels

Labeling AI-generated content is currently the “best” way to make AI content palatable to consumers, but the practice has its critics and limitations. Although we advise labeling most AI-generated content you post, there are some situations where it’s unnecessary.

AI labels are meant to be neutral descriptions of the technology used to generate or modify a specific piece of content. However, many consumers read them as indicators of whether the content is true or false. And real images with minor cosmetic or artistic AI-assisted edits are sometimes automatically labeled as AI content on social media platforms, which can cause problems for those accounts.

Overall, users expect the most transparency (in this case, labeling) for content that is completely AI-generated, is photorealistic, depicts current events, or shows real people doing or saying things they didn’t do or say. Many people also agree that AI-generated and AI-edited content should carry different labels on social platforms.

In many situations, you’re not using AI to create your content from scratch. Most marketers use AI as a tool to aid the content creation process. As mentioned earlier, we always recommend reviewing, editing and fact-checking any AI-created content before posting it to make sure it’s high quality, accurate and in line with your brand standards.

Naomi highlights that while labeling AI-generated content should be standard, the real challenge is determining where to draw the line between AI-generated and AI-assisted work. “People have different opinions, so there’s no clear-cut answer,” she notes. For instance, with a blog article, AI might help create a structure or draft, which is then edited—so is it AI-generated or just AI-assisted?

“I think if you’re using AI for brainstorming or rewording a sentence, but you’re putting in the effort and the final work feels like your creation, it shouldn’t need to be labeled as AI-generated,” Naomi says, though she acknowledges that others argue any AI input should be labeled. “While labeling AI-generated images should be standard as they are at their core AI-created, written content remains a gray area, making it a tough call.”

How much you want to disclose AI involvement in your content creation process is ultimately up to you and your team. However, there are some common situations where it may not be necessary to label AI content:

- AI-edited photos: Many image editing apps, like Photoshop, have built-in AI tools. Photoshop’s Generative Fill, for example, lets you add or remove elements in an image by typing a text prompt, like removing a hand or adding a water drop to a leaf. While these tools use AI, the images they’re used on are still primarily human-created.

If Generative Fill (or another tool you’re using) modifies an image without changing it substantially, an AI label isn’t necessary. After all, most images used in advertising today are edited in Photoshop in some way, and that editing isn’t always disclosed. Be careful, though: some social networks have been known to slap an AI-generated label on images that were only lightly retouched with AI tools.

- Research and brainstorming: Many writers and content creators use AI to help them generate ideas on a topic or compile research from sources fed into the program. If the actual article that results from this work is completely human-written and -edited, and the information is reliable and objective, AI labeling isn’t necessary.

If you think it’s a good idea, you could disclose your editorial process either at the bottom of the article or on your About Us page on your website. But a big AI-generated tag isn’t necessary and may lead to people unnecessarily distrusting your content, particularly if the article is attributed to a human author.

- Functional copy: Content that doesn’t involve creative expression, like summaries, titles, metadata descriptions, and generic functional web and app copy, has long been machine-generated. As outputs of these types of copy are predictable, generating them with AI is normal and won’t affect the quality of the actual work. Specifically labeling them as AI-generated isn’t necessary, either.

- Be careful with derivative content: Content reconfigured directly from someone else’s work should be approached the same way it was before we started regularly using AI. For example, suppose you’re creating a table or graph based on data from a copyrighted study. In that case, you should include a notice and properly attribute the source to avoid any accusations of copyright infringement.

However, short derivative content based on your own work may not need to be labeled as AI-generated. For example, if you feed ChatGPT a list of successful email subject lines that your company has used in the past and ask it to generate new ones based on the ones on that list, you wouldn’t need to disclose those subject lines as being AI-generated.

Striking a balance: Blending AI with human creativity

AI isn’t getting rid of marketing jobs, and it’s not a substitute for human creativity. On the contrary, it takes care of more boring or repetitive tasks and lets humans focus on the more creative aspects of their jobs. AI works best when you see it as a partner in your work, and not your enemy or servant.

Naomi highlights the value of ChatGPT “as a tool for generating ideas or outlining articles.” She emphasizes, however, that “it’s not meant to produce the finished product—we prioritize keeping the human element in writing.” It can refine drafts by identifying ambiguous sentences and suggesting improvements, although it’s up to a human editor to decide if the suggestions are better than the original copy. “When used effectively with the right processes, it has the potential to boost efficiency and quality,” she said.

By combining smart use of AI with content that reflects your company’s voice and expertise, you can create AI-assisted content that resonates with your customers. You’ll still need human editors to verify facts and quality and to add the “human element” that brings extra emotion and persuasion.

To keep AI-assisted content feeling authentic, you should:

- Use AI to generate ideas, but add your own thoughts, feelings, and strategies

- Review any AI-created content to make sure it’s still in your brand voice

- Fact-check any factual information obtained from AI, as AI is known to fabricate facts and quotes

- Inform users when AI tools were used substantially in the final content creation

- Keep learning and stay up to date on the latest developments in AI technology and regulations

“At VeraContent, we view AI as a tool, a friend and a helping hand: something to make our jobs easier and our work better. While many fear AI might replace jobs, our approach is to use it to assist linguists and enhance the consistency of our content,” says Naomi.